Our Work

Founded in 2014, the Center on Privacy & Technology is a leader at the intersection of privacy, surveillance, and civil rights.

Latest Work

Fritz Fellow Simone Edwards Presents at Fritz Conference

The Center's 2023-2024 Fritz Fellow, Simone Edwards, presented alongside the Massive Data Institute team at the annual Fritz Conference. She discussed her work on the Fritz team during the 2023-2024 school year. This included analyzing documents disclosed in response to the Center's FOIA requests and conducting strategic corporate research into the companies and individuals behind the technologies police departments were buying, specifically probabilistic genotyping programs.

Watchword Prize Winner Announced

The Center announced the winner of the inaugural Watchword Prize: “Watchman, What of the Night?” by author Michael Colonnese. The poem is available to read on the Center's website. Poems were judged by acclaimed poet and Georgetown faculty member Carolyn Forche.

Senior Associate Cynthia Khoo on The Signal with Adam Walsh

Senior Associate Cynthia Khoo appeared on The Signal with Adam Walsh, a radio show on CBC Newfoundland and Labrador. She spoke about the civil rights implications of artificial intelligence, including the bias and abuse embedded in how AI-based tools are developed and deployed. Cynthia also highlighted factors regulators should keep in mind when weighing the potential benefits and harms of AI, including who is disproportionately harmed, false advertising that presents products as AI while hiding the human workers behind them, and the need to avoid technosolutionism. Her interview was played on air for comment by the NL Information and Privacy Commissioner.

“5 Takeaways from the Privacy Center’s Community Teach-in on Algorithmic Housing Discrimination” blog

On December 15, 2023, the Center on Privacy & Technology at Georgetown Law hosted a virtual teach-in on algorithmic housing discrimination in DC. Associate Emerald Tse summarized five key takeaways from the event in a post on our blog.

Faculty Director David Vladeck Publishes Op-Ed in The Baltimore Sun

David Vladeck co-authored an op-ed in The Baltimore Sun, “You’re giving away your rights in those online contracts you don’t read,” which argued that businesses are stripping away consumer rights through online contracts. The piece called on the Consumer Financial Protection Bureau (CFPB) to outlaw the use of unfair clauses in some consumer contracts.

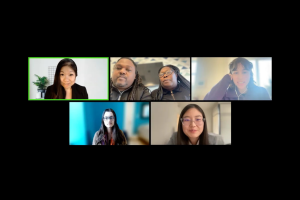

Virtual Teach-In: Algorithmic Housing Discrimination in D.C.

Senior Associate Cynthia Khoo, Director of Research and Advocacy Stevie Glaberson, and Justice Fellow Emerald Tse coordinated a virtual teach-in on algorithmic housing discrimination in DC. The teach-in featured speakers with legal and research expertise in, or firsthand experience of, algorithmic housing discrimination, including Natasha Duarte (Upturn); Susie McClannahan (Equal Rights Center); Troy and Monique Murphy (individual members of the Fair Budget Coalition); and Wanqian Zhang (3L at Georgetown Law and Student Attorney at the Communications and Technology Law Clinic).

Comments to the Department of Health and Human Services

The Privacy Center and Upturn co-authored a comment to the US Department of Health and Human Services on a proposed update to the agency’s regulations implementing Section 504 of the Rehabilitation Act of 1973. The comment argues that the proposed rule is incomplete because it fails to address the points in the system where most discrimination occurs: reporting, screening, and investigation. It also highlights how agencies may use algorithmic and data-driven tools that contribute to disability discrimination, and it offers recommendations for addressing discrimination at the front end of the system.

Tech Policy Press: Does ICE Data Surveillance Violate Human Rights Law? The Answer is Yes, and It’s Not Even Close

The Center’s Executive Director, Emily Tucker, and a Clinical Fellow at the International Justice Clinic at UC Irvine Law co-authored a piece in Tech Policy Press highlighting the United Nations Human Rights Committee's Concluding Observations, which call out how ICE’s surveillance practices conflict with human rights law and the right to privacy. Those observations echo the report we co-wrote with the International Justice Clinic and submitted as part of the Committee's periodic review process.

Cited Report: The New Yorker

The New Yorker published an in-depth piece on technology and policing that cites our report, The Perpetual Line-Up, and Laura Moy's 2021 law review article.

“Meet The Team: Q&A With Emerald” blog

In November 2023, Emerald Tse joined the Privacy Center team as a Justice Fellow. Read our Q&A with her on our blog.